Study on Longform Speech ASR (Liangliang Cao's talk)

04 Mar 2023

This post is based on Liangliang Cao's seminar talk "Reducing Longform Errors in End2End Speech Recognition".

Motivation

The outline of the talk is as follows:

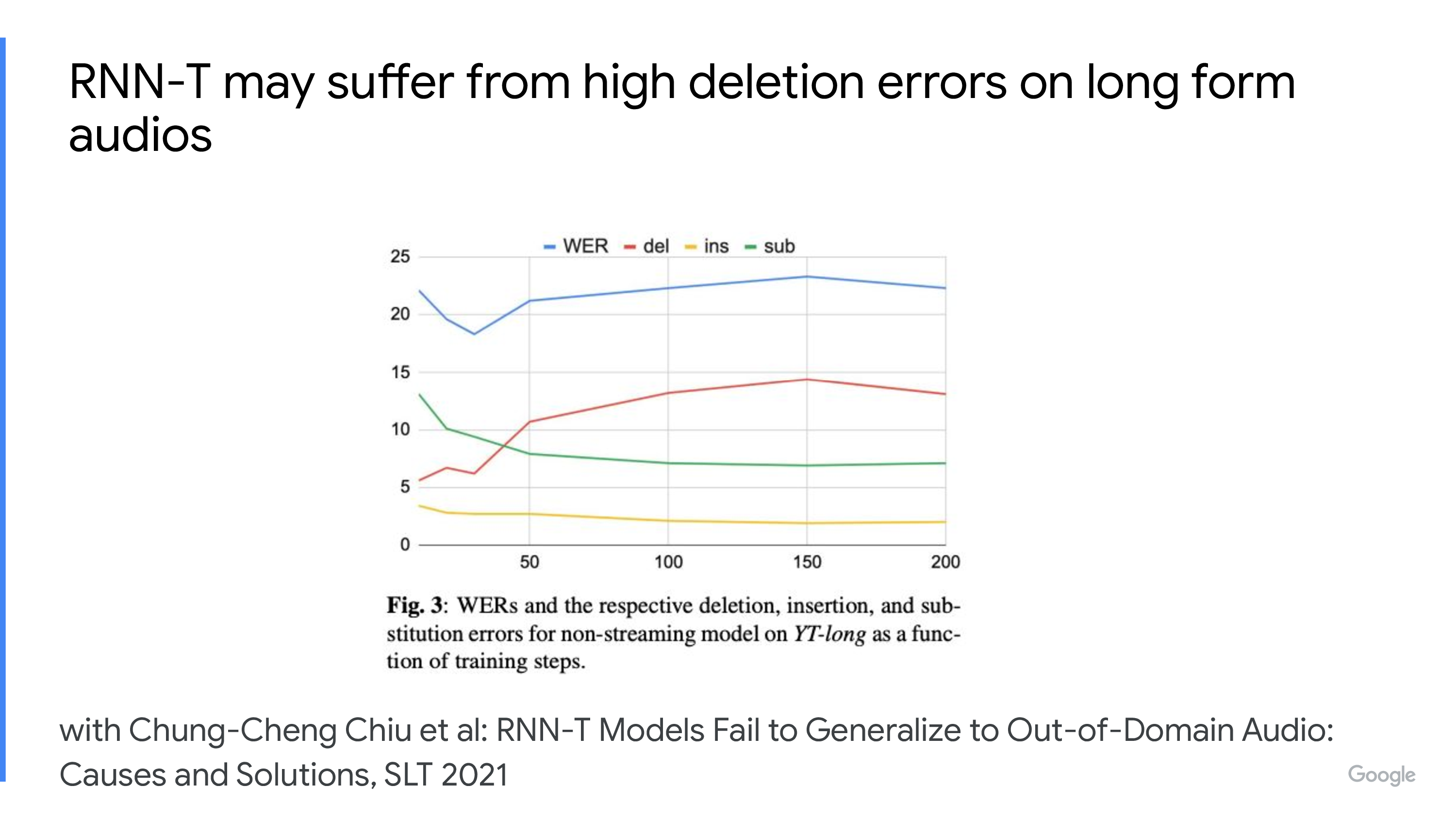

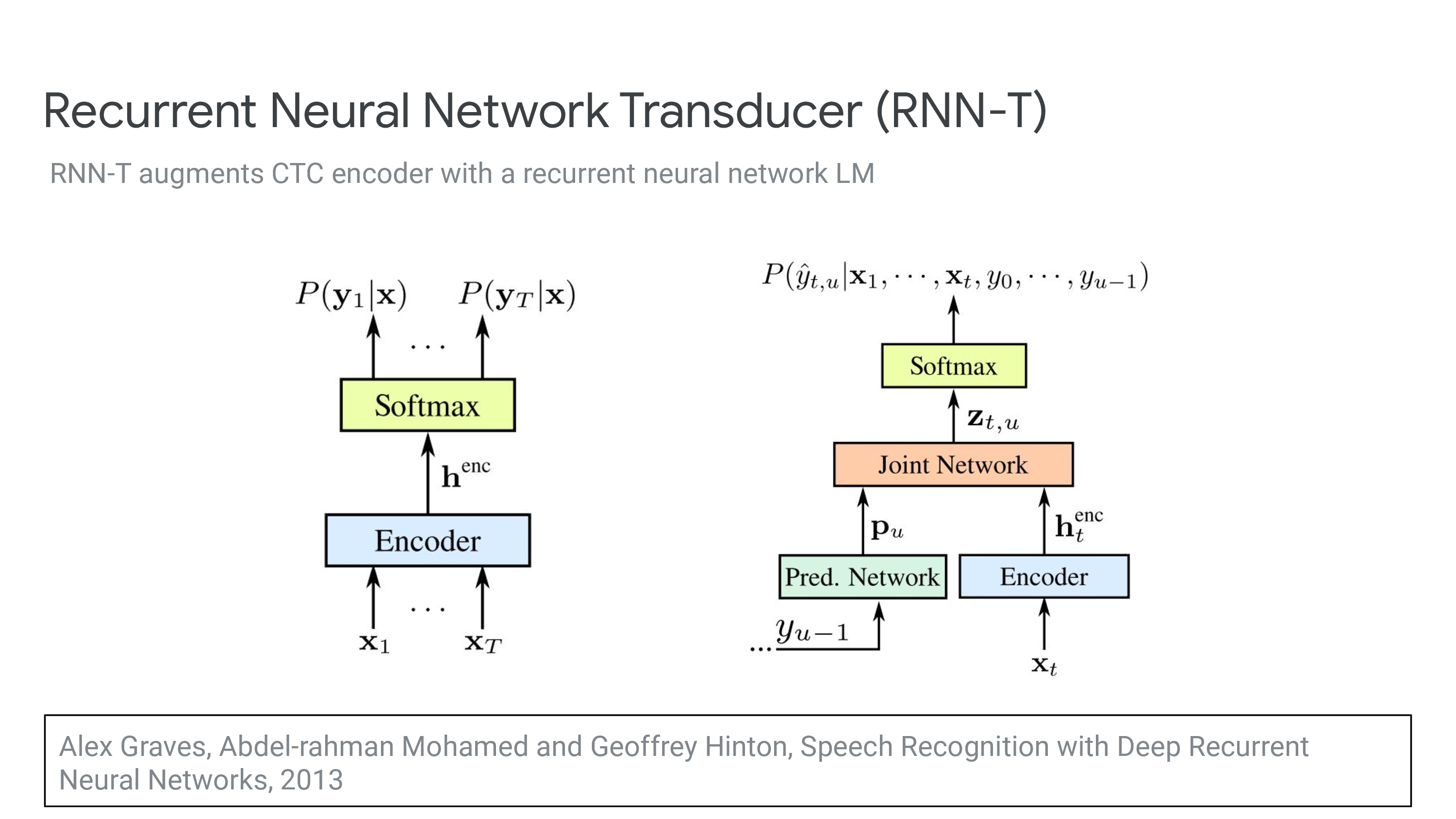

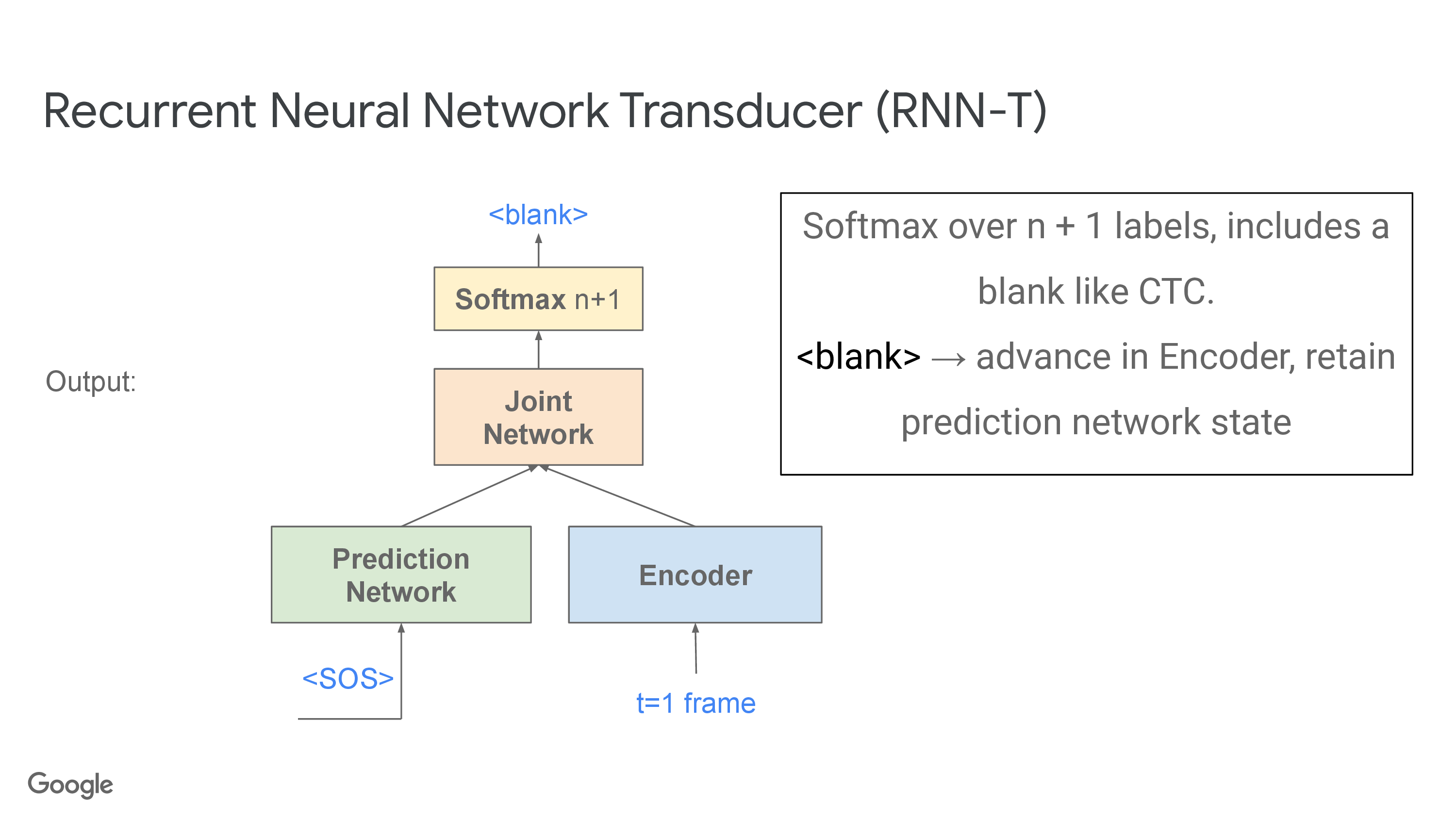

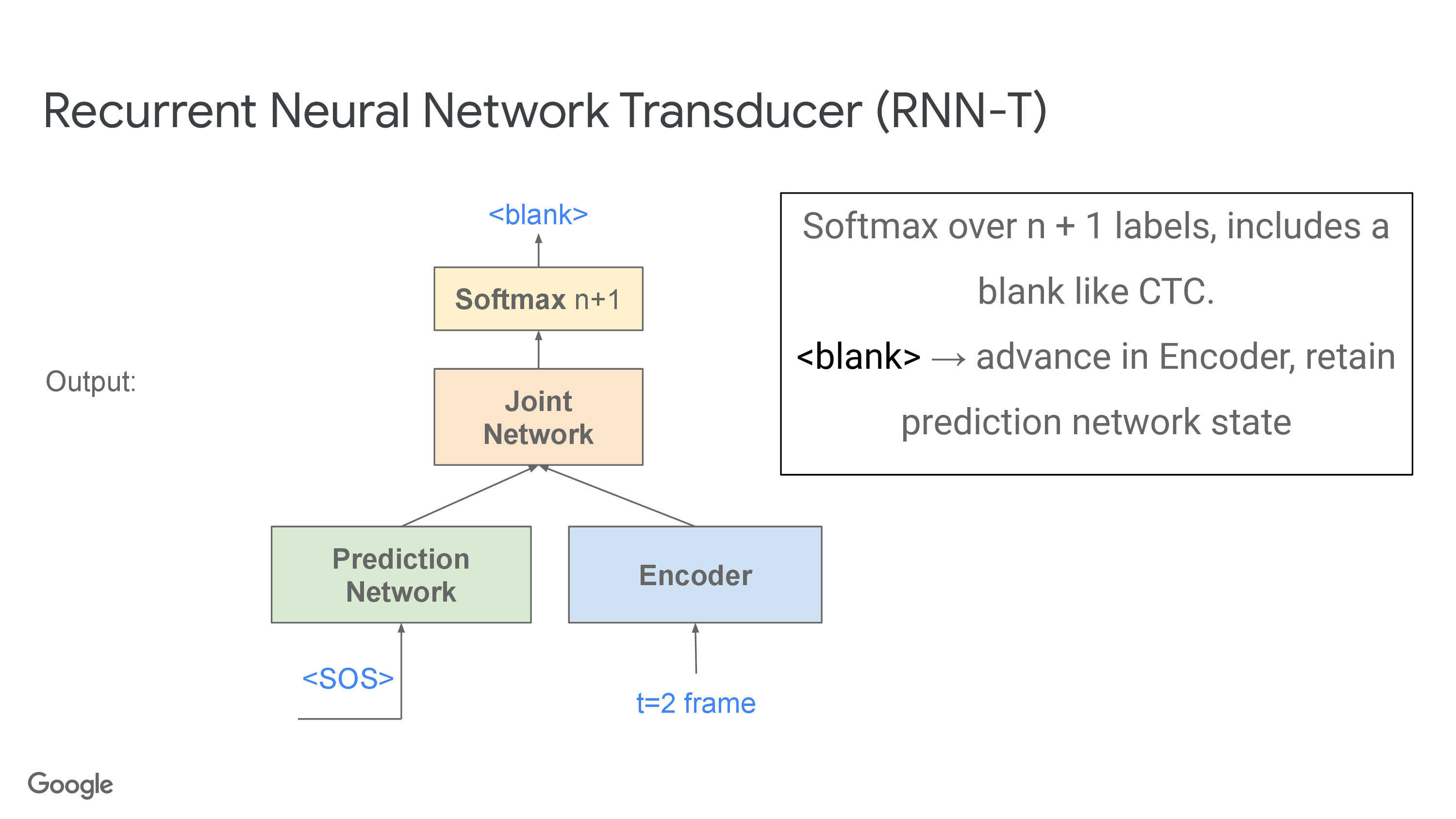

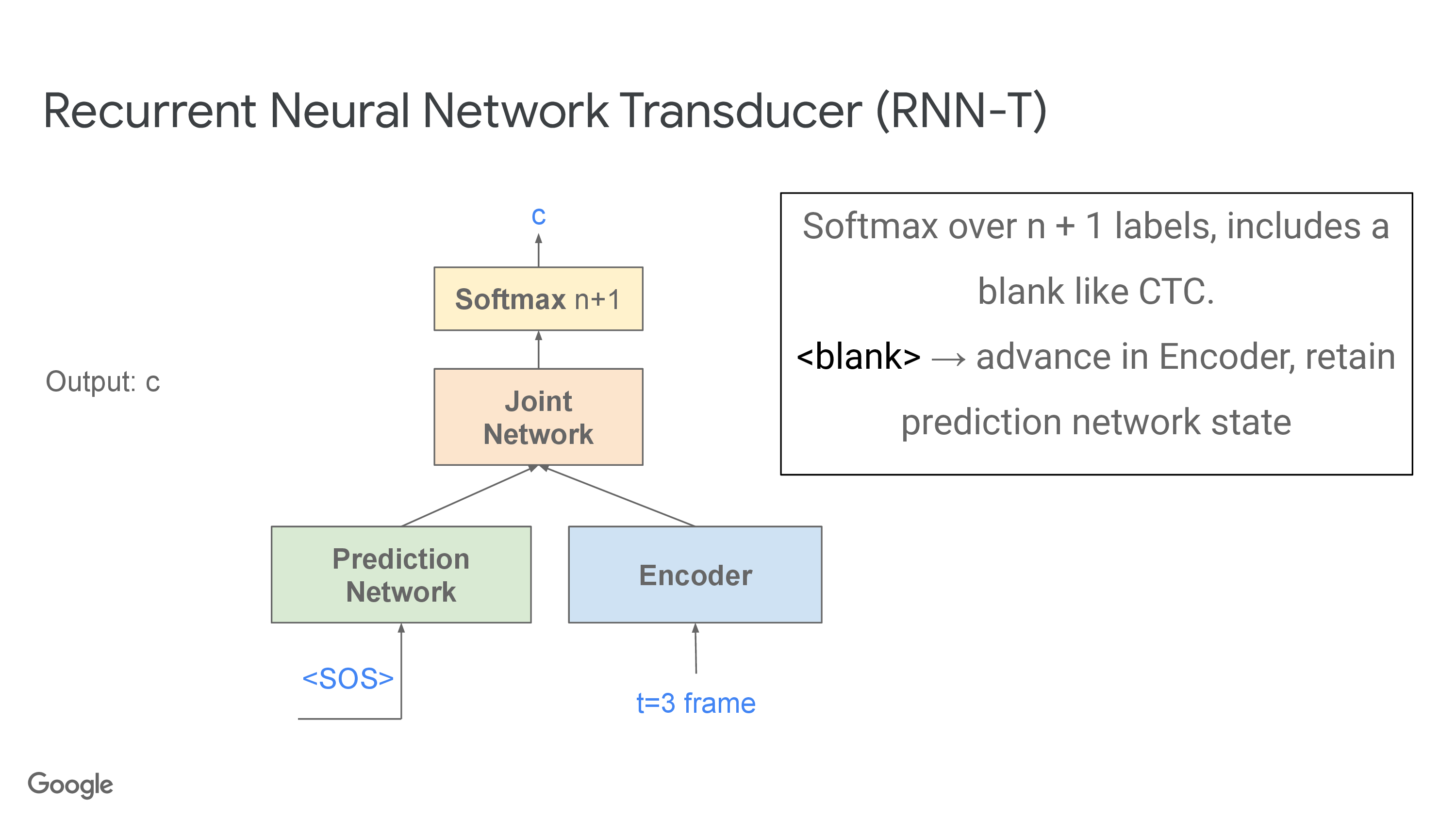

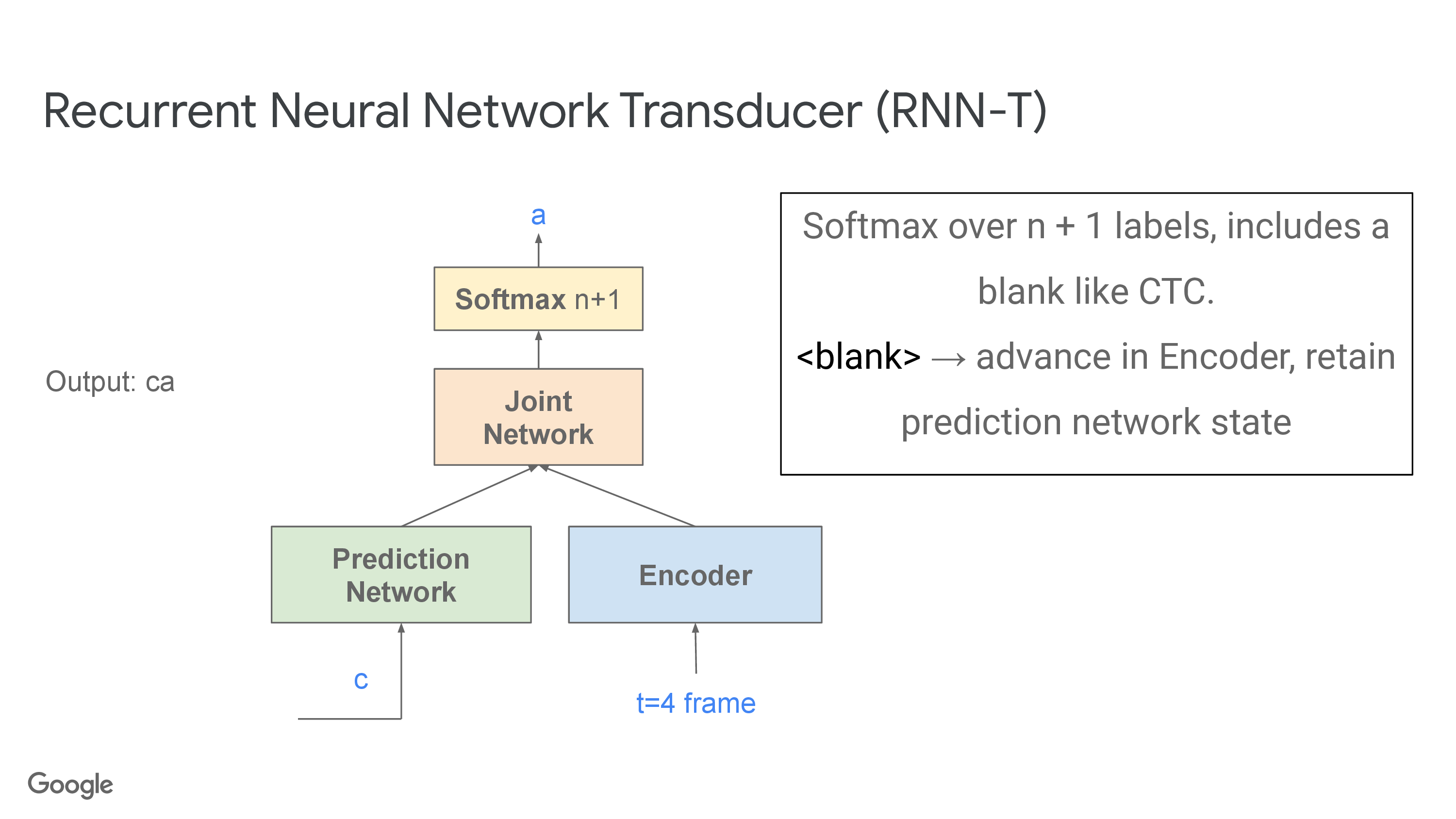

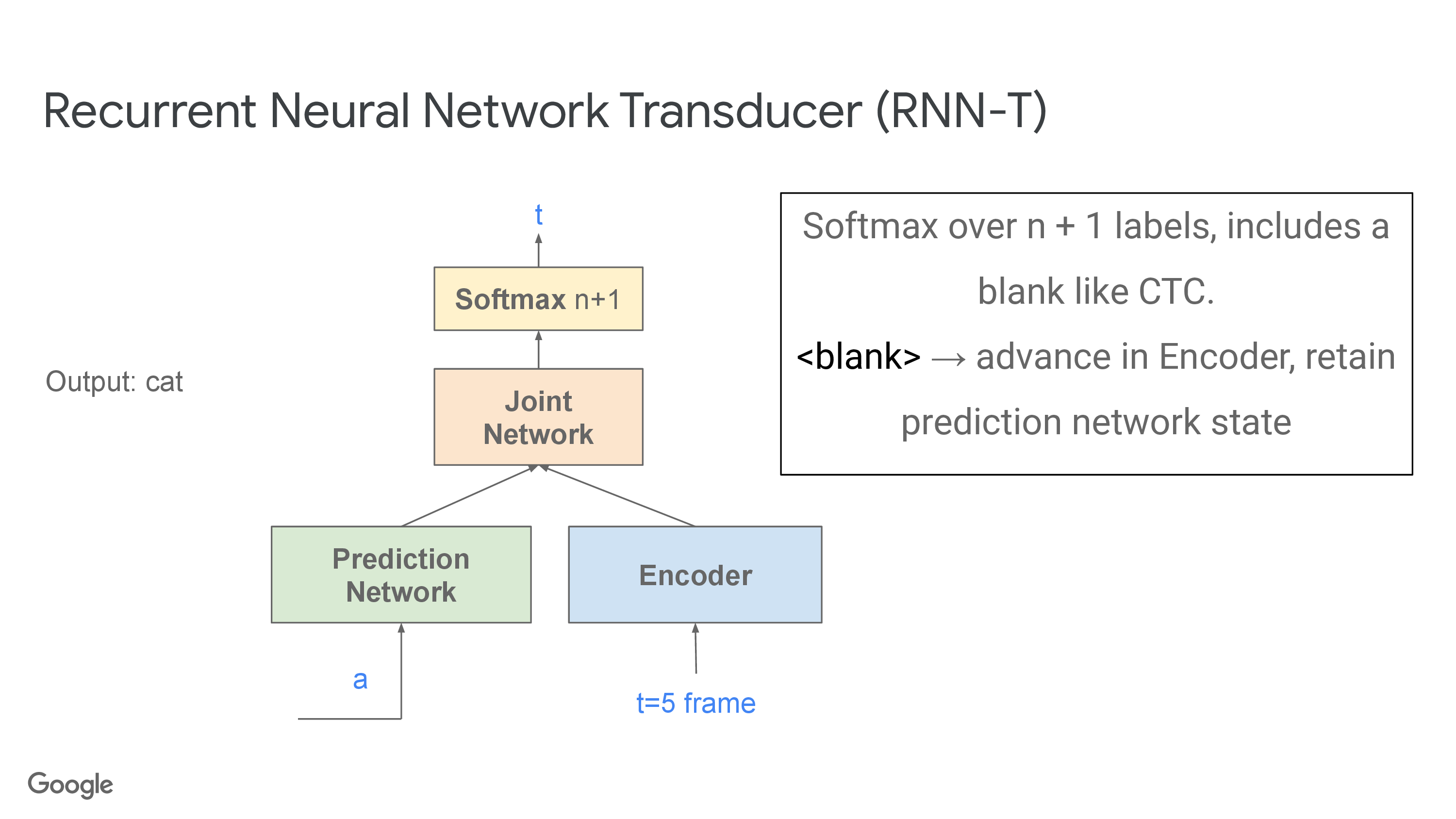

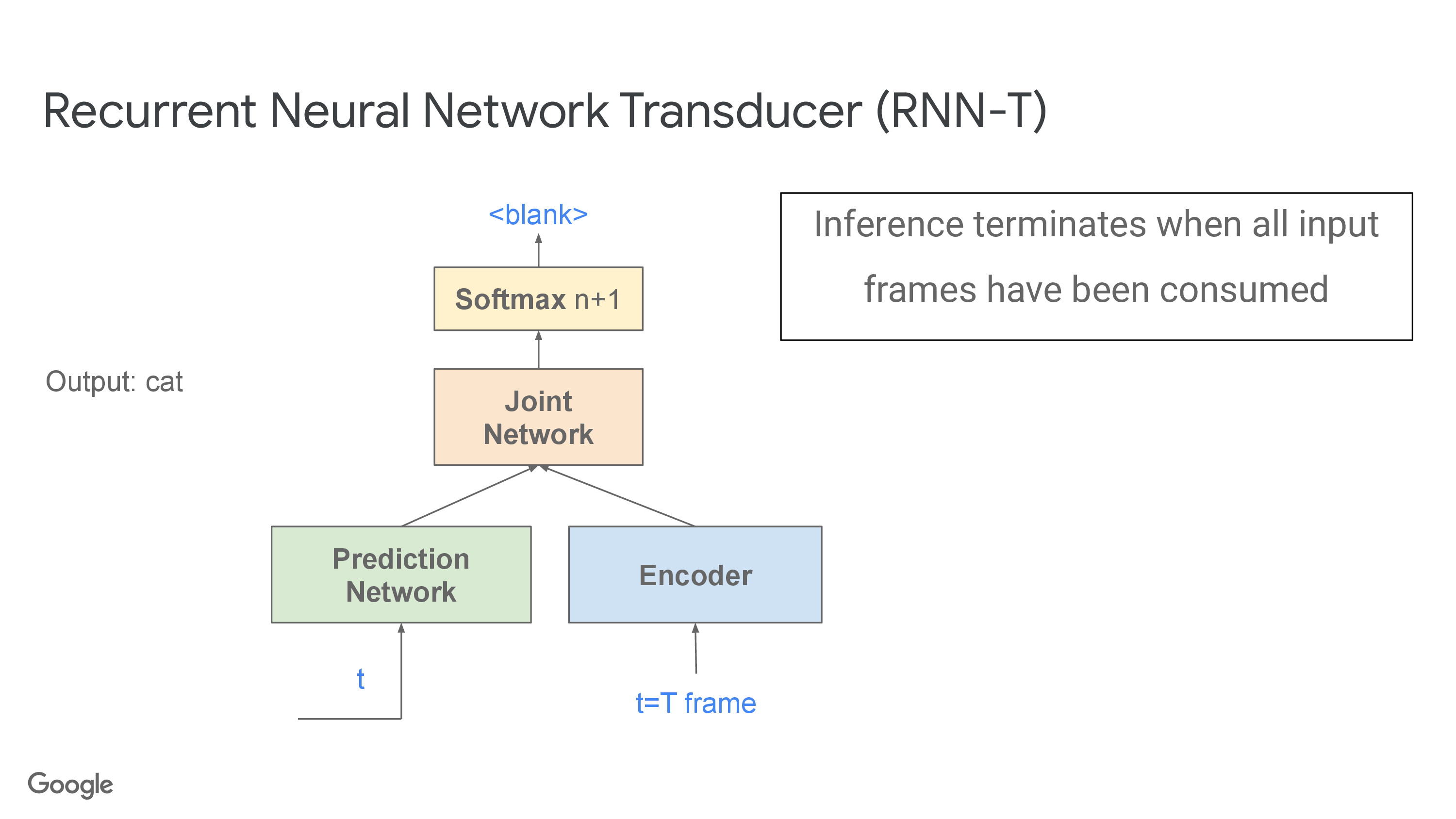

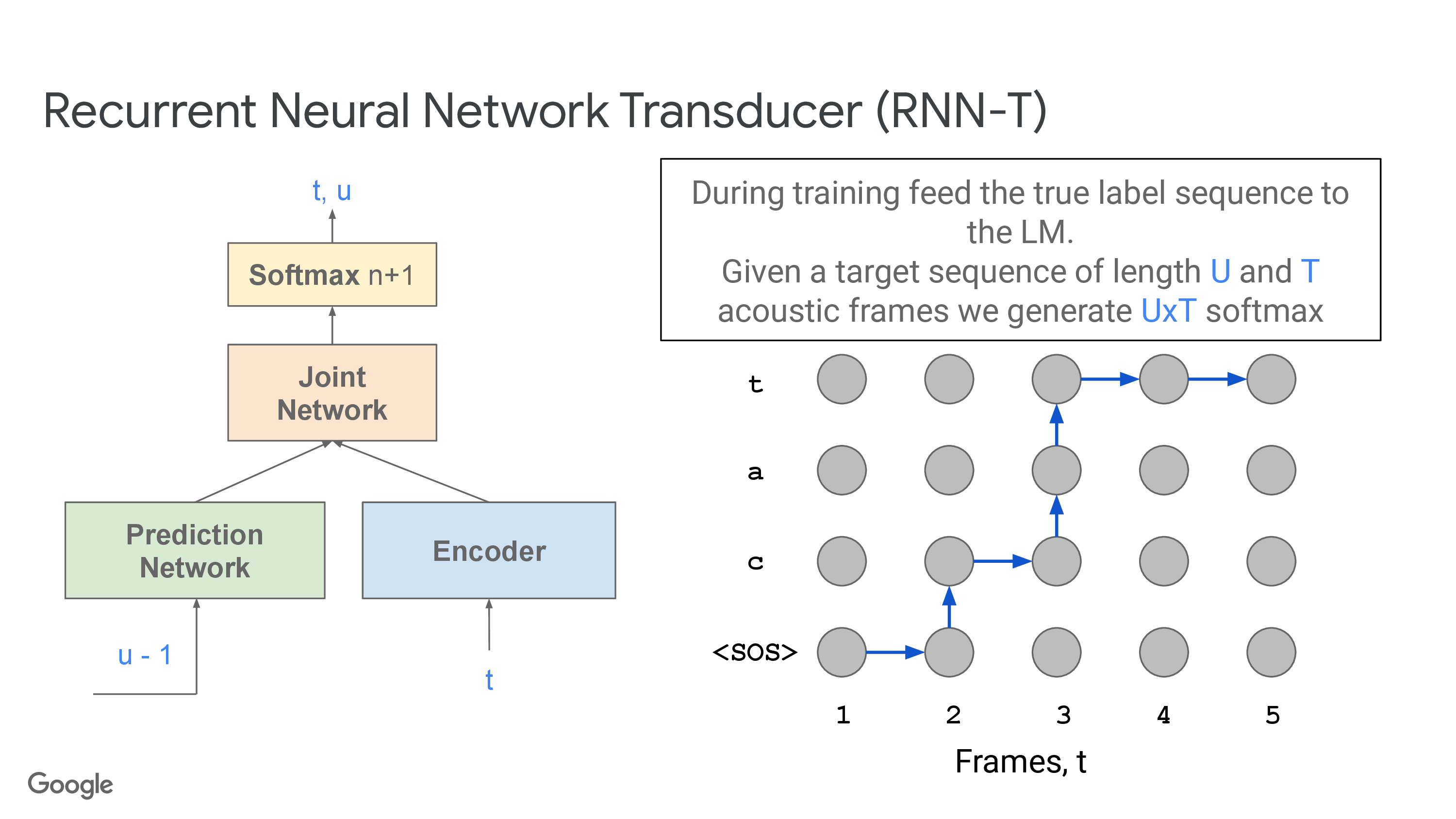

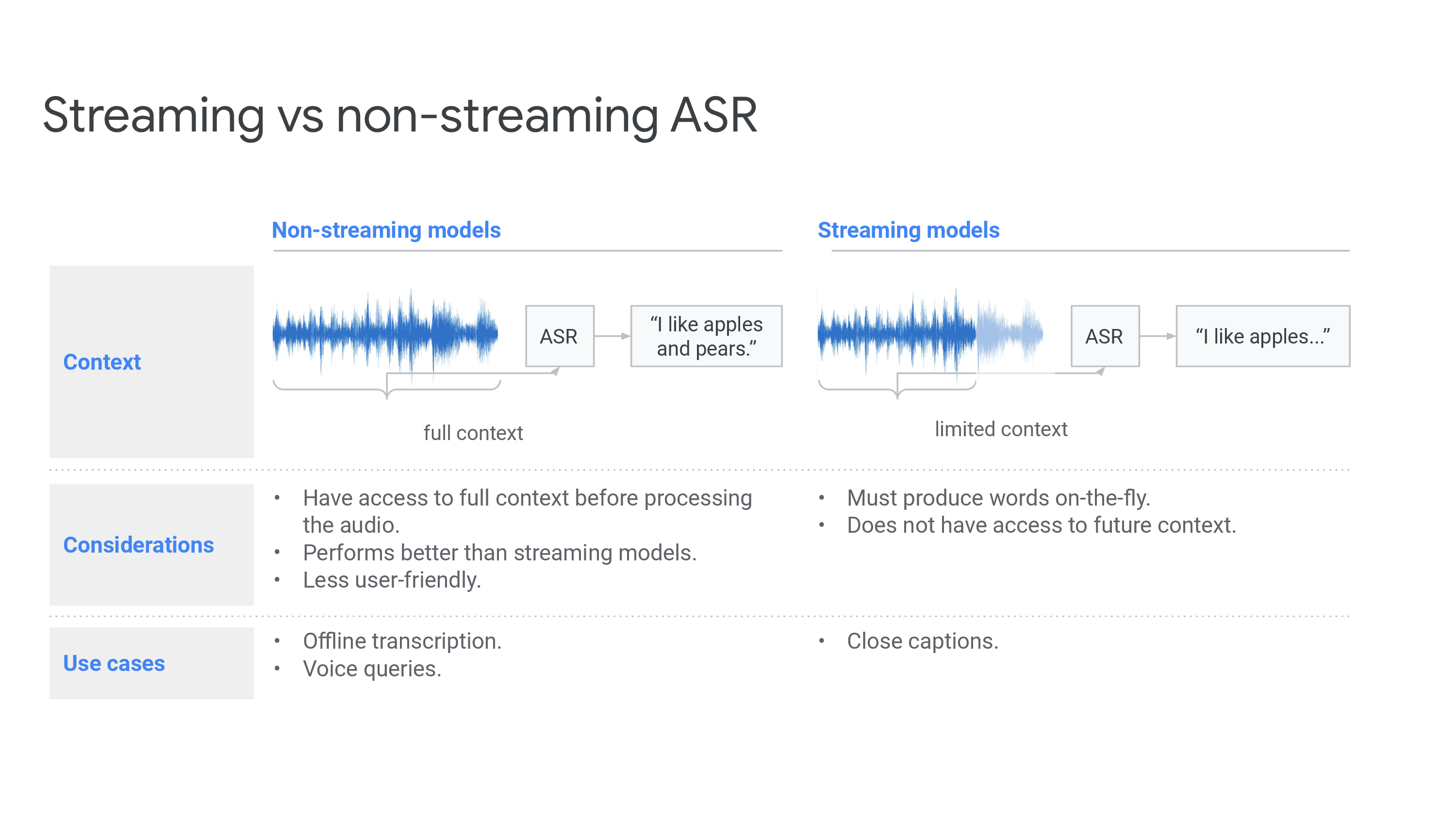

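The problem addressed is that end-to-end models degrade on long audio inputs. RNN-T, for example, produces many deletion errors on long-form speech. To understand this, the talk analyzes, among other things, how vulnerable each End-to-End ASR model is to long inputs.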
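To make "deletion error" concrete, here is a minimal sketch of the standard WER breakdown: a minimum-edit-distance alignment between the reference and hypothesis word sequences, counting substitutions (S), deletions (D) and insertions (I), with WER = (S + D + I) / N. The function names are hypothetical and this is not code from the talk. A long-form failure where the model simply stops emitting words shows up as D dominating the error count.

```python
from typing import Dict, List, Tuple


def align_errors(ref: List[str], hyp: List[str]) -> Tuple[int, int, int]:
    """Return (substitutions, deletions, insertions) from a min-edit-distance alignment."""
    n, m = len(ref), len(hyp)
    # dp[i][j] = (total_cost, subs, dels, ins) for aligning ref[:i] with hyp[:j]
    dp = [[(0, 0, 0, 0)] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = (i, 0, i, 0)  # every reference word deleted
    for j in range(1, m + 1):
        dp[0][j] = (j, 0, 0, j)  # every hypothesis word inserted
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            c, s, d, ins = dp[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                match = (c, s, d, ins)          # correct word, no cost
            else:
                match = (c + 1, s + 1, d, ins)  # substitution
            c, s, d, ins = dp[i - 1][j]
            delete = (c + 1, s, d + 1, ins)     # reference word missing from hypothesis
            c, s, d, ins = dp[i][j - 1]
            insert = (c + 1, s, d, ins + 1)     # extra word in hypothesis
            # Tuples compare on total cost first; ties are broken arbitrarily but consistently.
            dp[i][j] = min(match, delete, insert)
    _, subs, dels, inss = dp[n][m]
    return subs, dels, inss


def wer_breakdown(ref_text: str, hyp_text: str) -> Dict[str, float]:
    ref, hyp = ref_text.split(), hyp_text.split()
    s, d, i = align_errors(ref, hyp)
    return {"sub": s, "del": d, "ins": i, "wer": (s + d + i) / max(len(ref), 1)}


if __name__ == "__main__":
    # A truncated hypothesis, as in a long-form failure: all errors are deletions.
    print(wer_breakdown("the cat sat on the mat today", "the cat sat"))
```

For the truncated example, the hypothesis is four words shorter than the reference, so any alignment needs at least four deletions; the breakdown is therefore 0 substitutions, 4 deletions, 0 insertions, and WER = 4/7.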

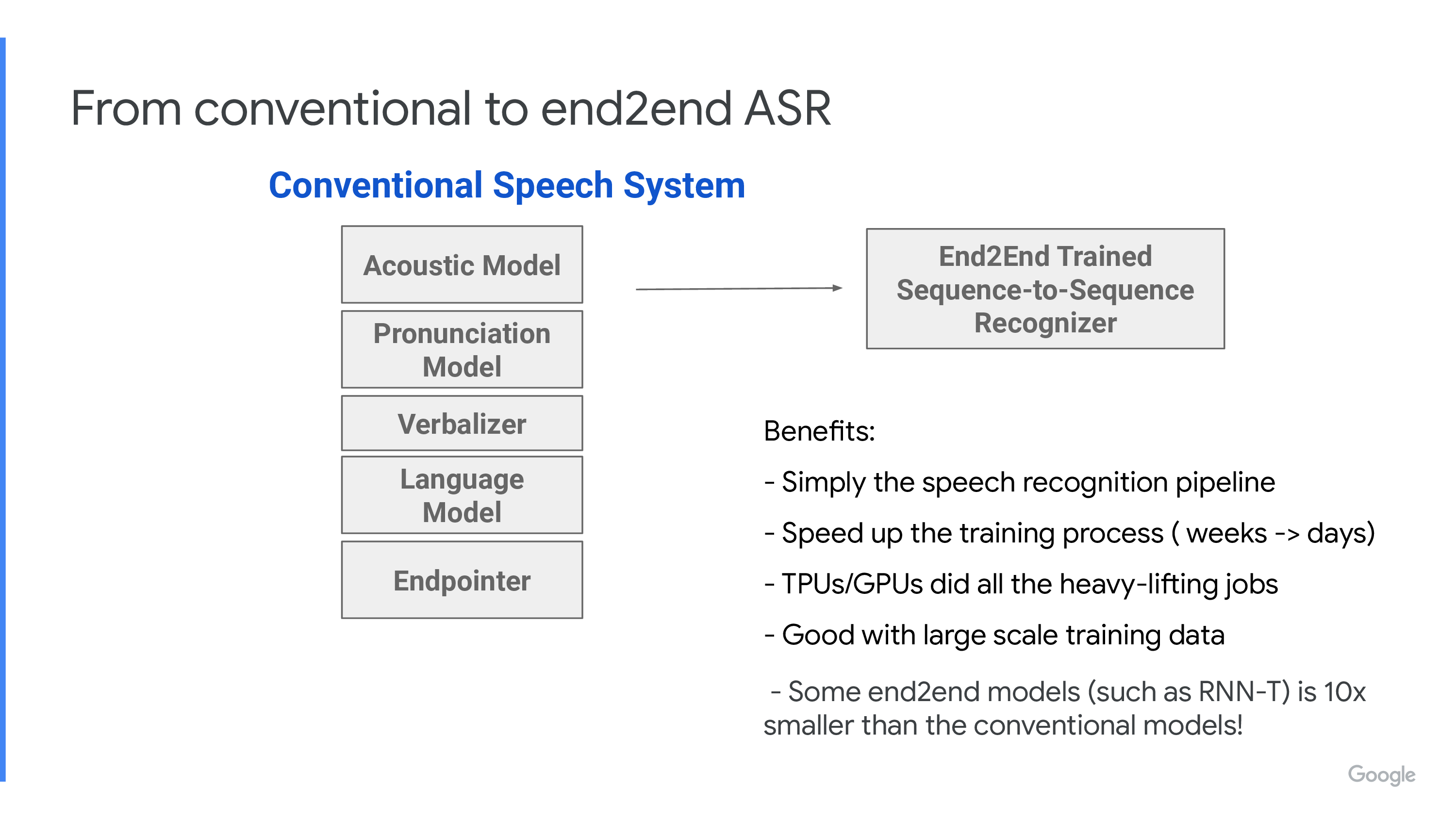

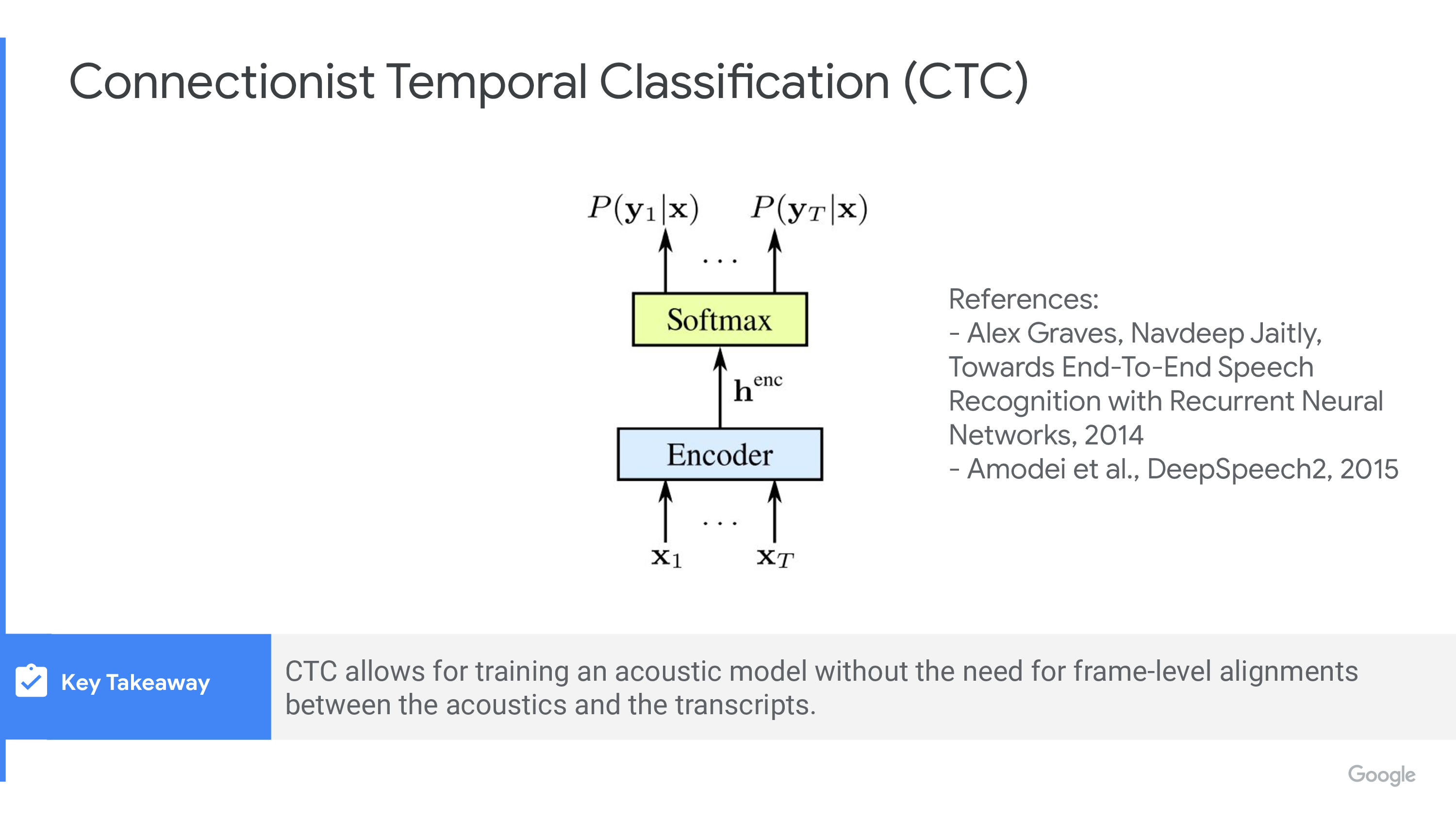

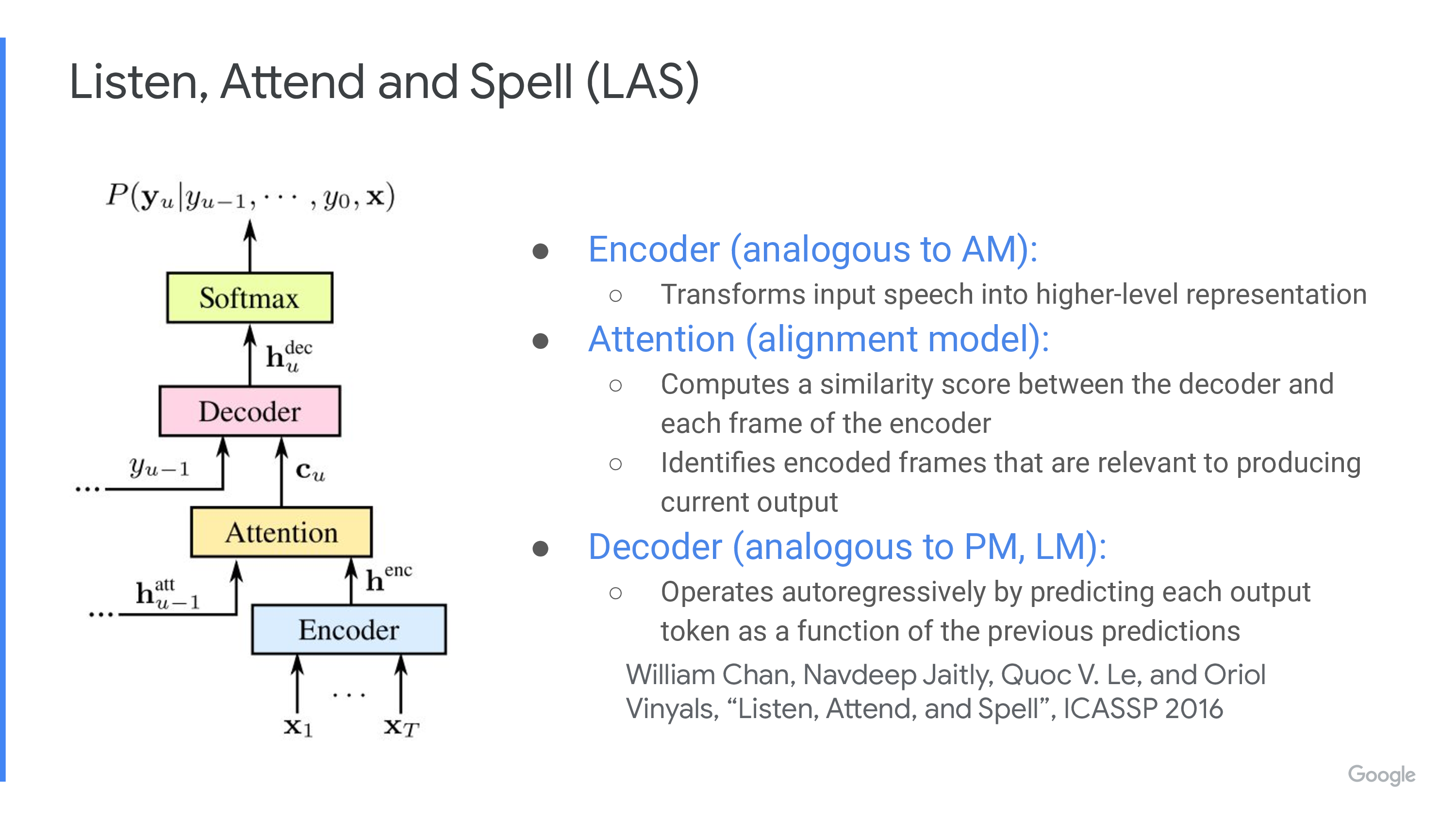

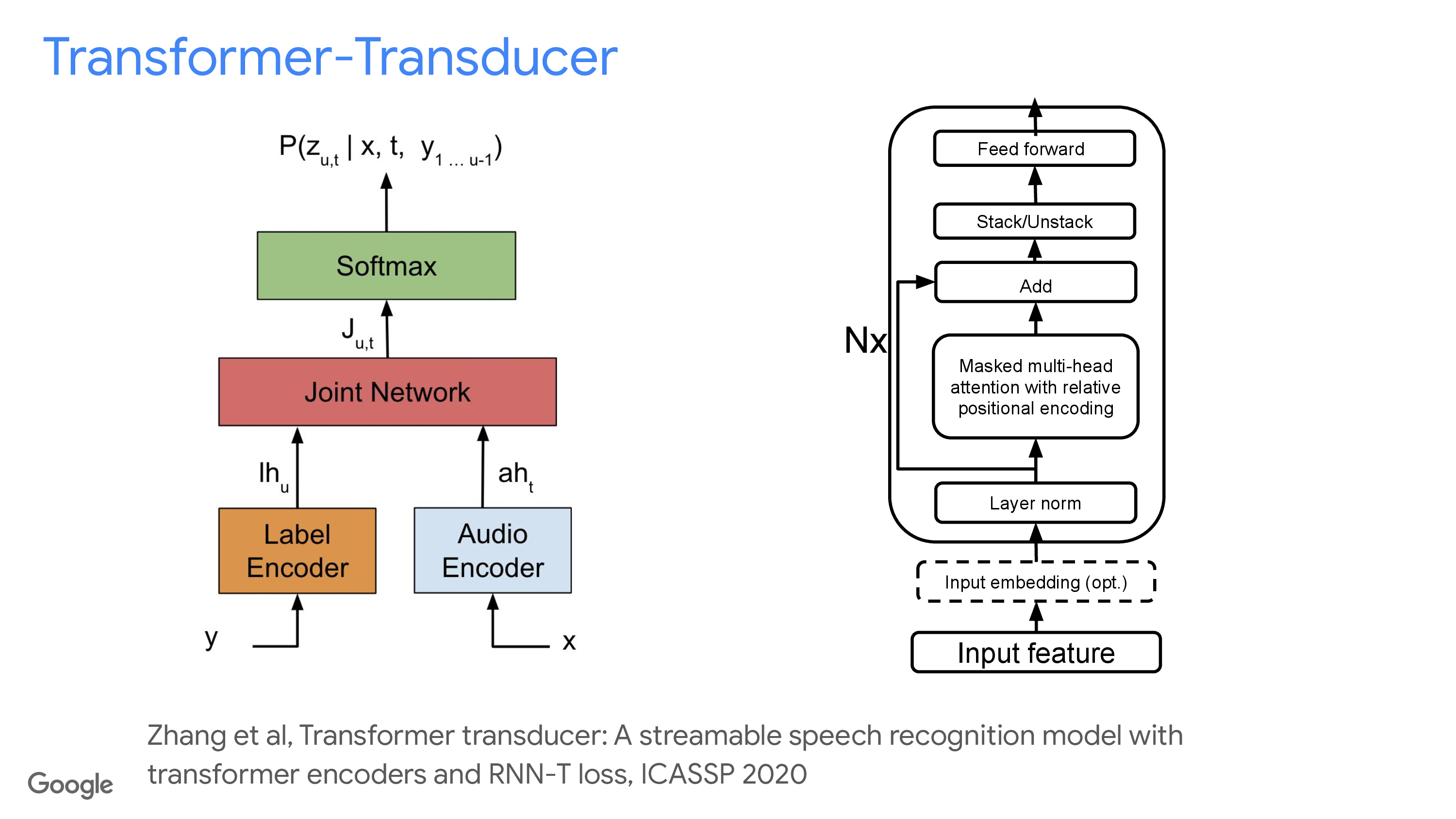

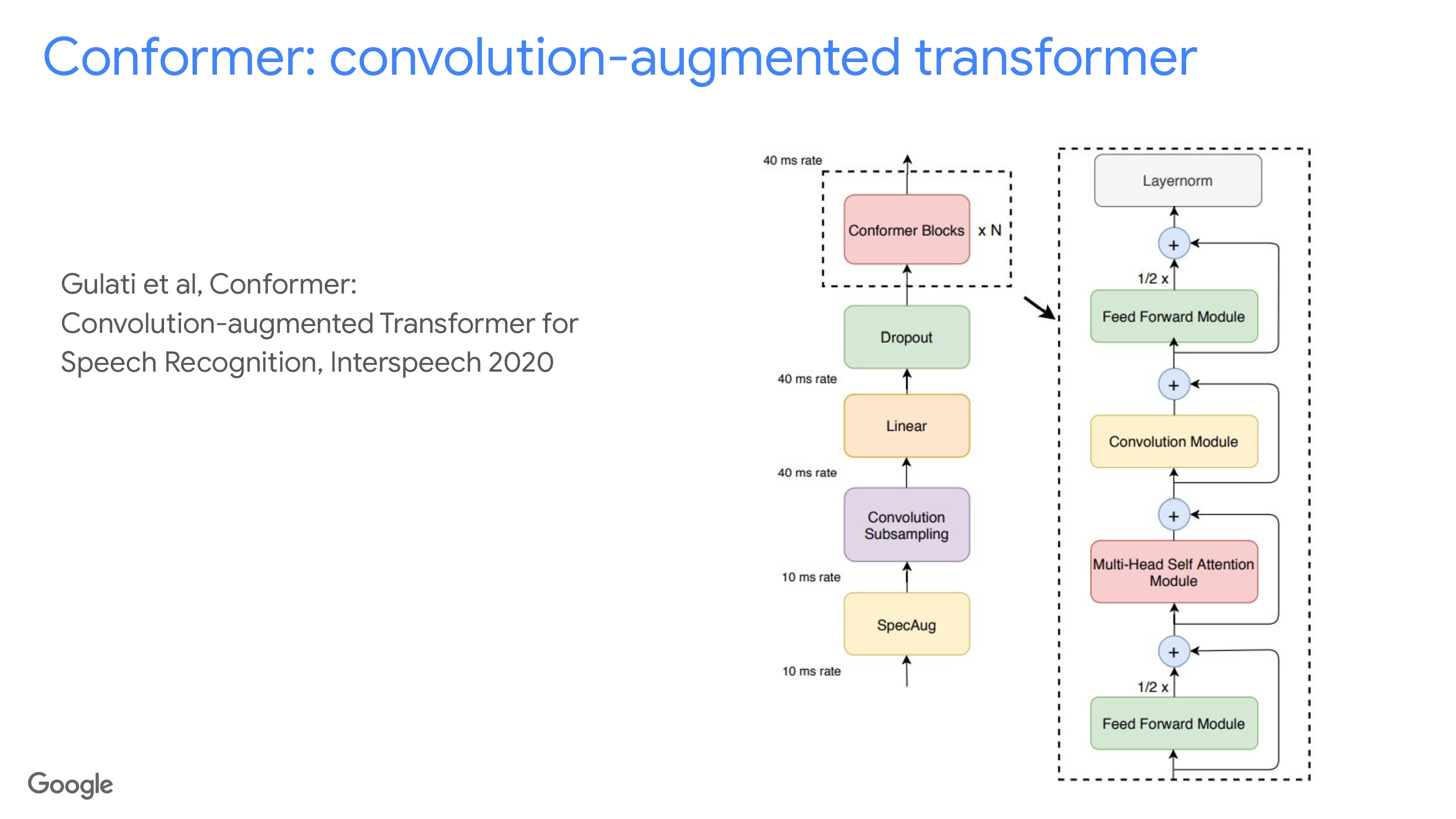

End-to-End ASR methods built on CTC, RNN-T, and similar approaches are covered only briefly here.

Fig. Compared with a conventional ASR system, end-to-end ASR trains all modules (AM, LM, etc.) jointly at once, so training is simpler and faster; the model is also typically smaller.

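As a reminder of how a CTC model turns frame-level outputs into text, here is a minimal sketch of best-path (greedy) CTC decoding: take the argmax label at each encoder frame, merge consecutive repeats, then drop blanks. It assumes the blank symbol is index 0 and that the input is a list of per-frame log-probability lists; the names are illustrative, not from the talk. RNN-T differs in that its output at each step is also conditioned on previously emitted labels through a prediction network, which this sketch does not model.

```python
from typing import List, Optional, Sequence

BLANK = 0  # assumption: index 0 is the CTC blank symbol


def ctc_greedy_decode(frame_log_probs: Sequence[Sequence[float]], blank: int = BLANK) -> List[int]:
    """Best-path CTC decoding: argmax per frame, merge repeats, drop blanks."""
    best_path = [max(range(len(frame)), key=frame.__getitem__) for frame in frame_log_probs]
    output: List[int] = []
    prev: Optional[int] = None
    for label in best_path:
        # Emit only when the label changes and is not blank; a blank between two
        # identical labels is what lets CTC output the same symbol twice in a row.
        if label != prev and label != blank:
            output.append(label)
        prev = label
    return output


if __name__ == "__main__":
    # Toy per-frame scores whose argmax path is [1, 1, 0, 1, 2, 2, 0].
    path = [1, 1, 0, 1, 2, 2, 0]
    frames = [[0.0 if k == lab else -10.0 for k in range(3)] for lab in path]
    print(ctc_greedy_decode(frames))
```

Here the path 1, 1, blank, 1, 2, 2, blank collapses to the label sequence [1, 1, 2]: the first two 1s merge, the blank separates them from the third 1, and the repeated 2s merge.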

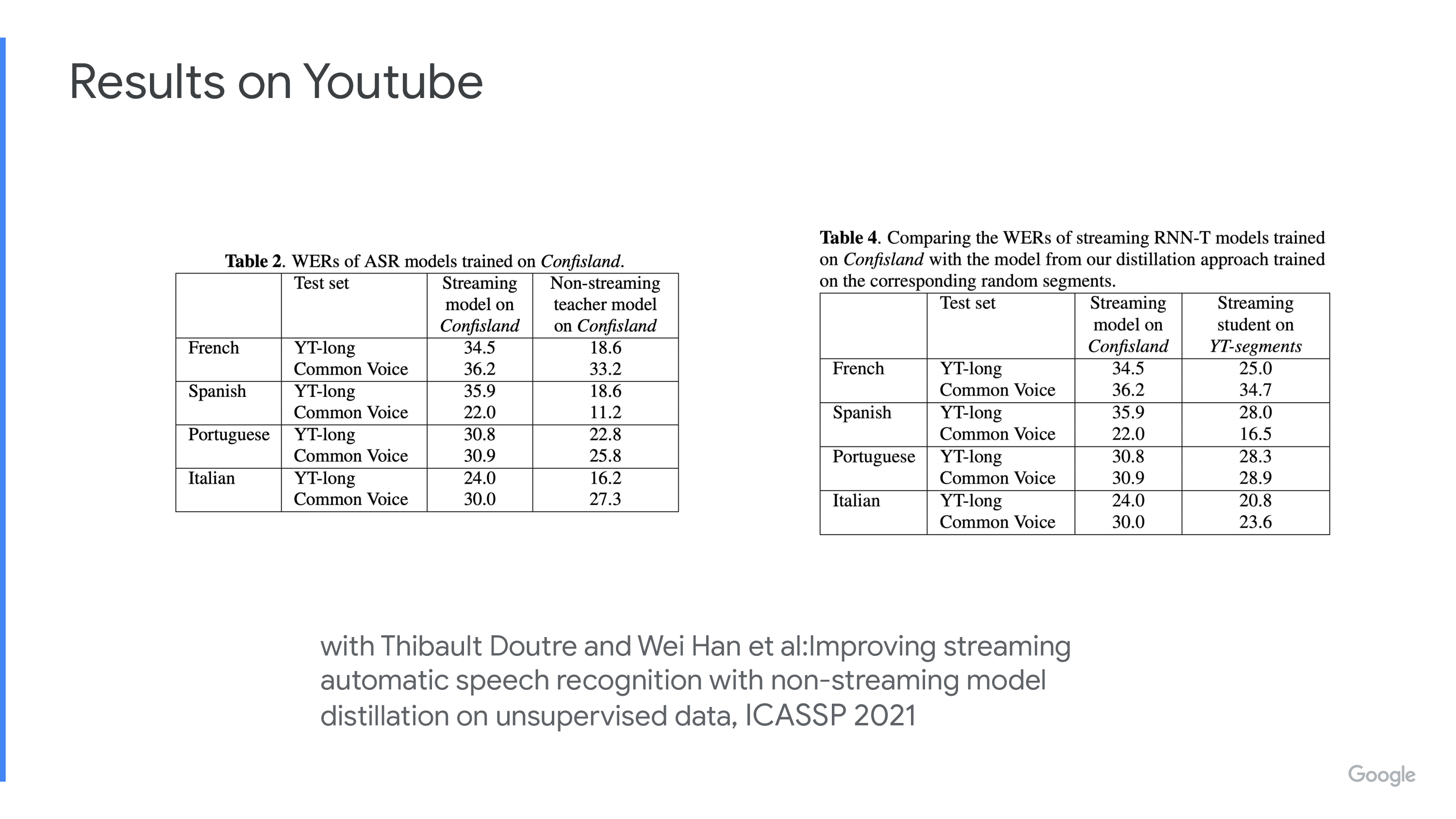

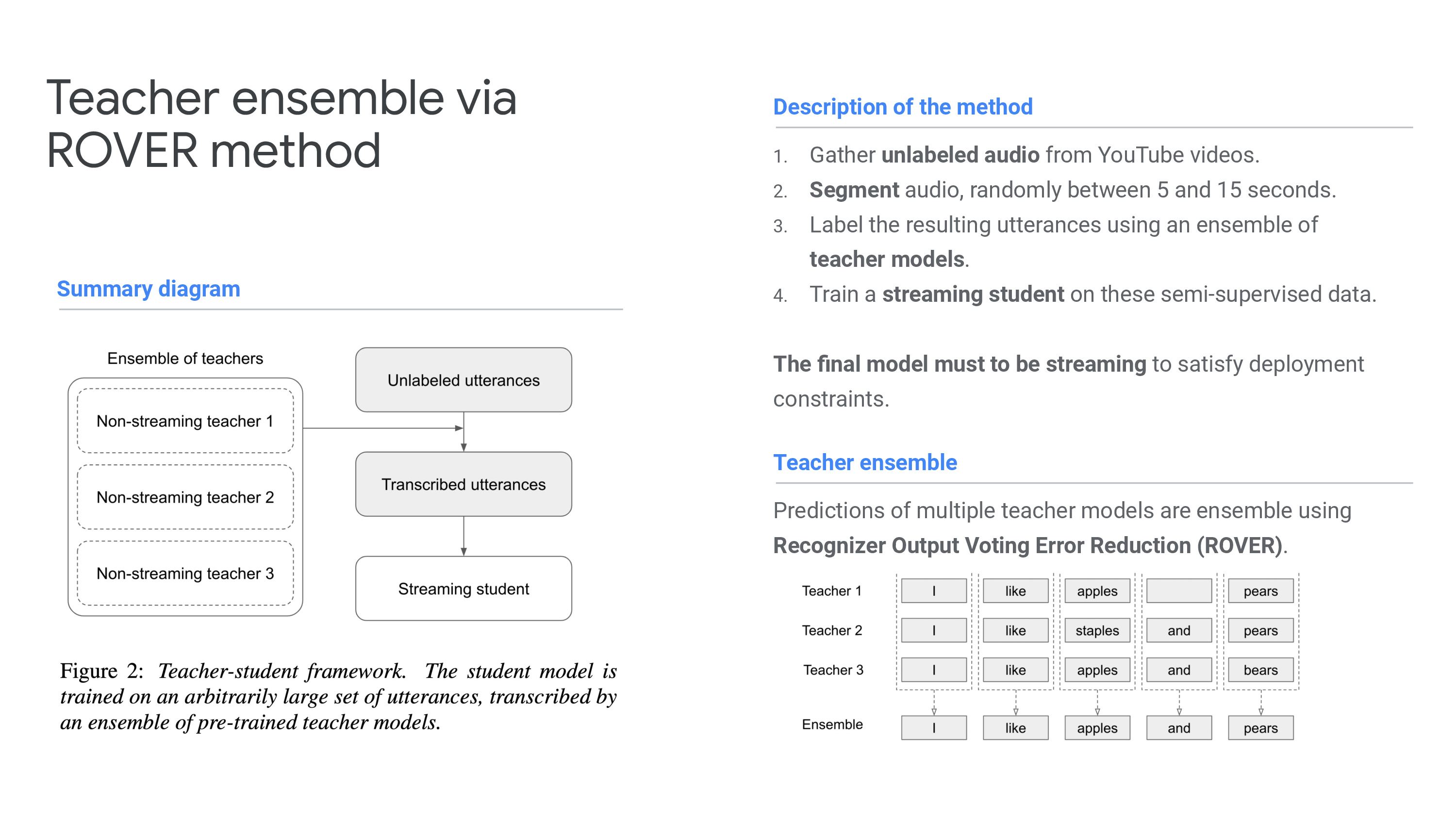

Teacher Distillation on YouTube Data

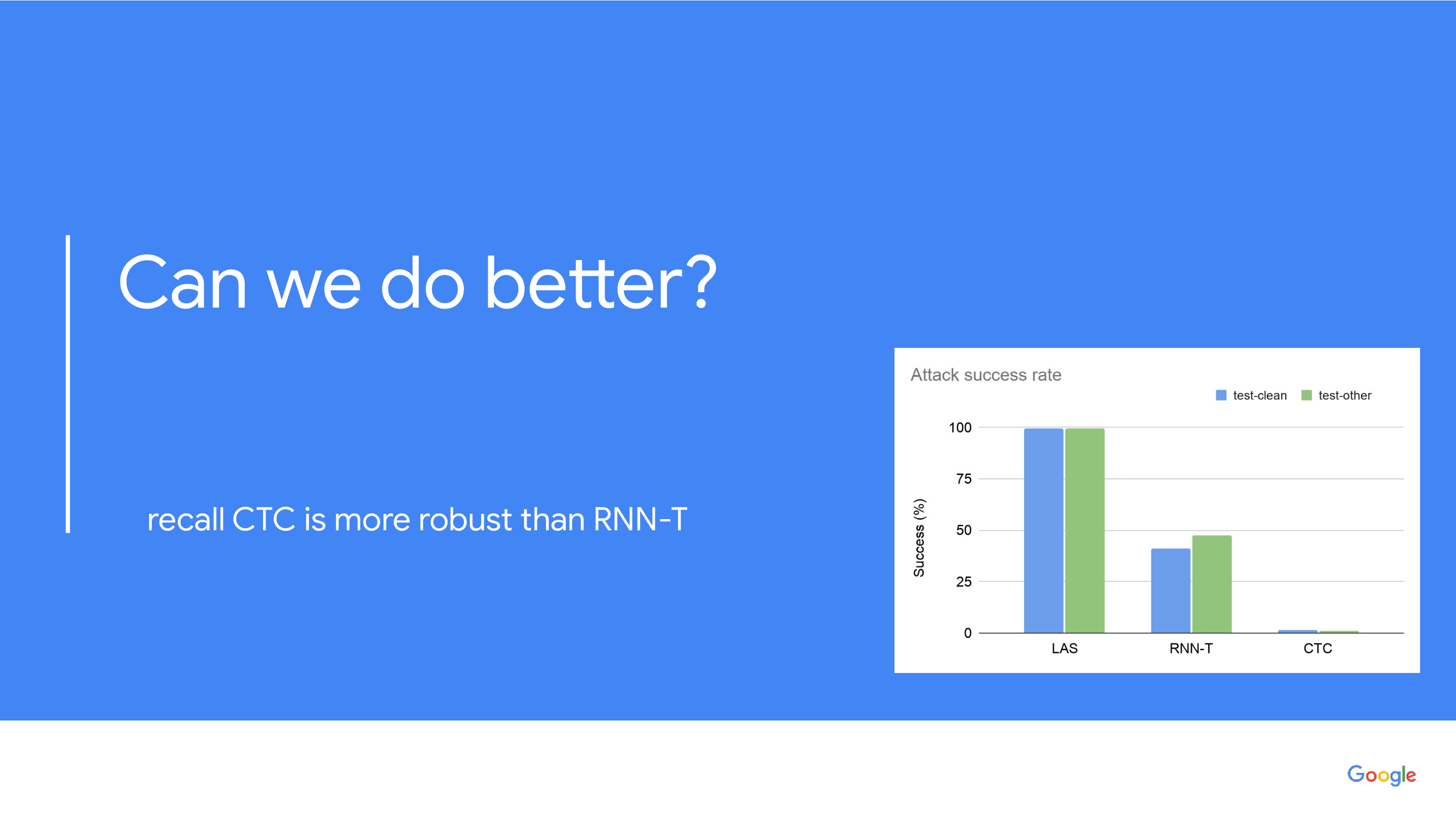

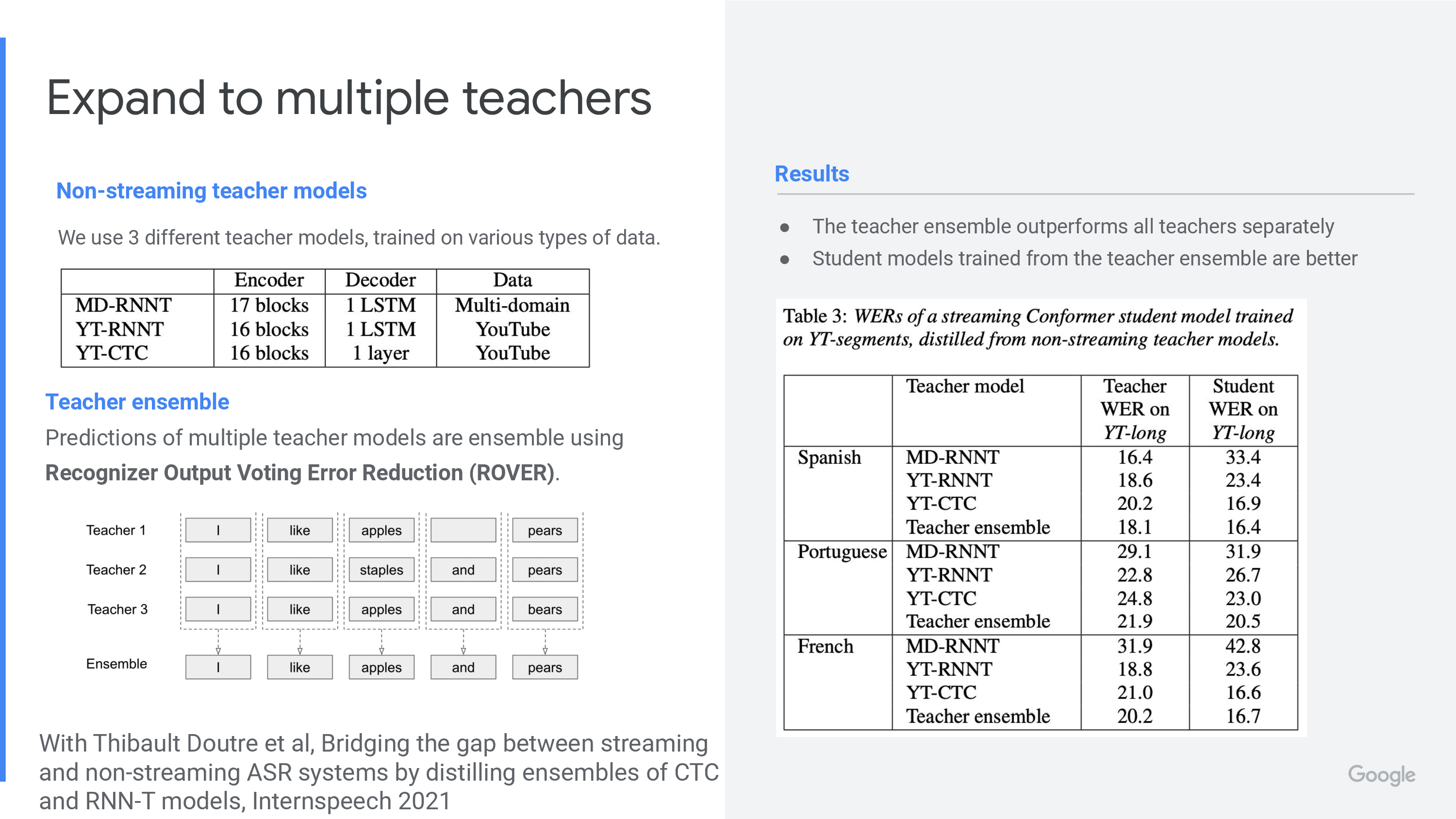

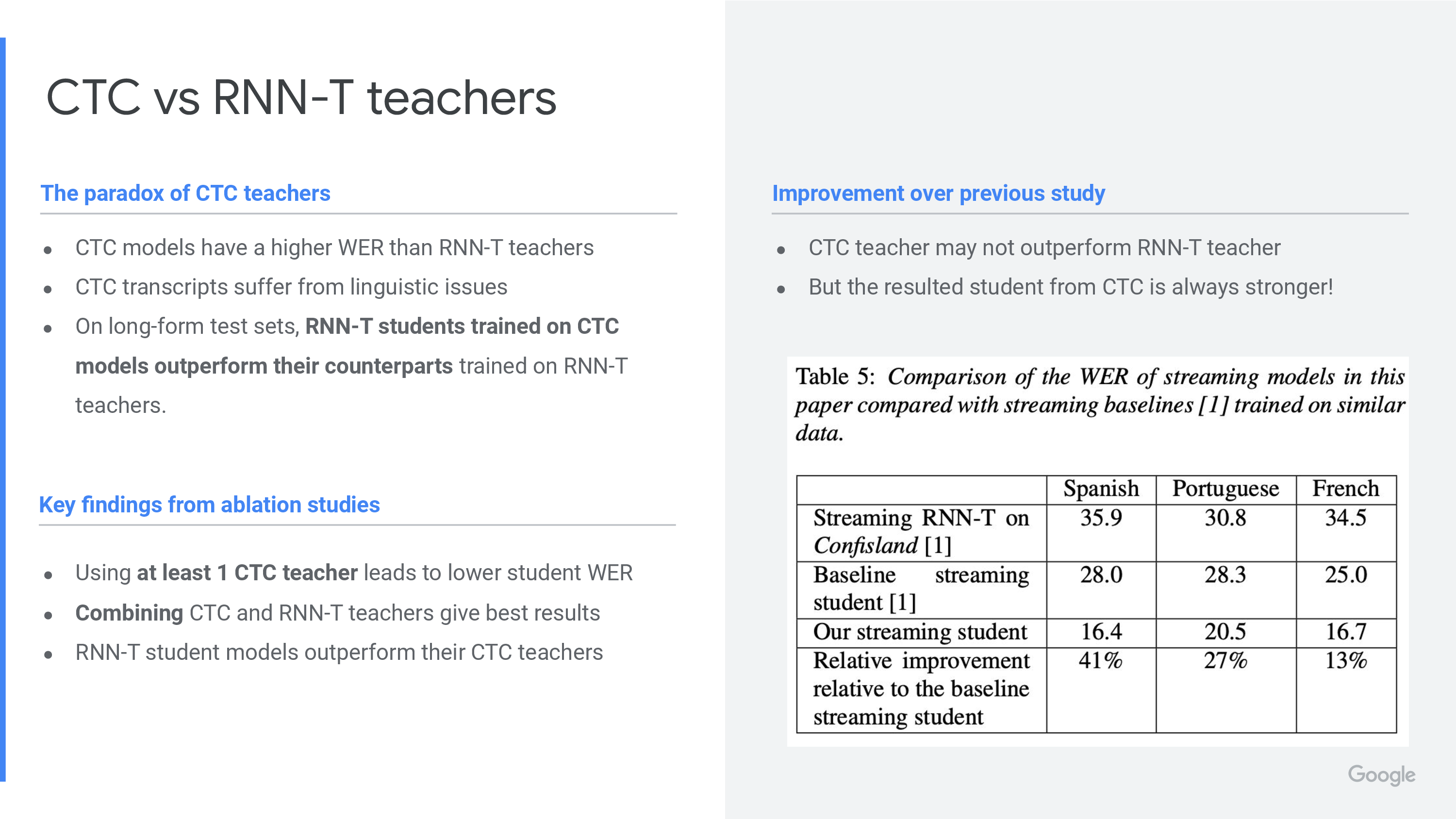

Combining CTC and RNN-T Models

References

- Reducing Longform Errors in End2End Speech Recognition from Liangliang Cao

- Video

- [Slide](http://llcao.net/paper/Talk_at_Rutgers_04052022.pdf)