(WIP) Rethinking Conventional Wisdom from the LLM perspective

28 Sep 2024

< Table of Contents >

- tmp

- (23rd Sep 2024) Rethinking Conventional Wisdom in Machine Learning: From Generalization to Scaling

- References

tmp

(23rd Sep 2024) Rethinking Conventional Wisdom in Machine Learning: From Generalization to Scaling

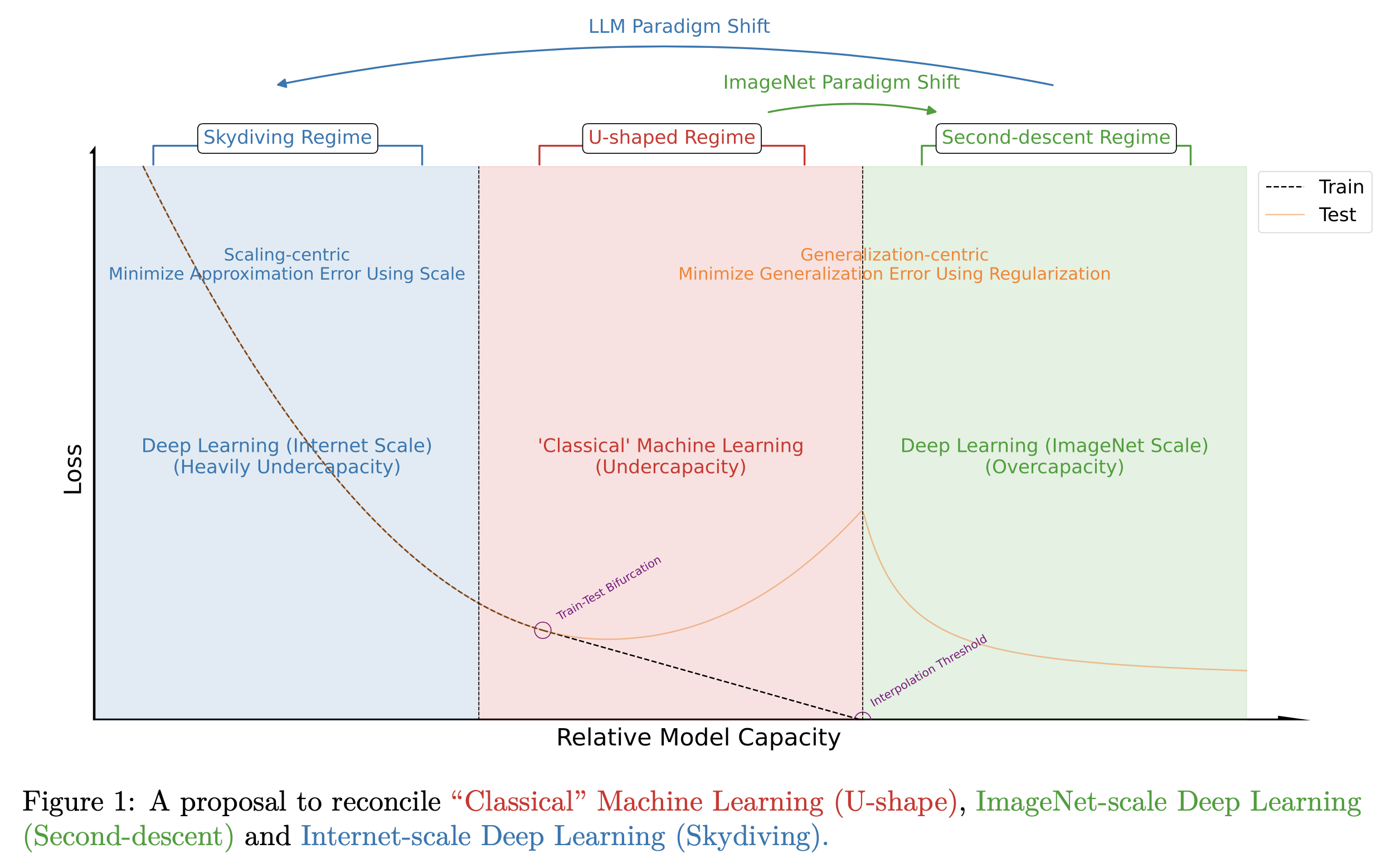

Fig.


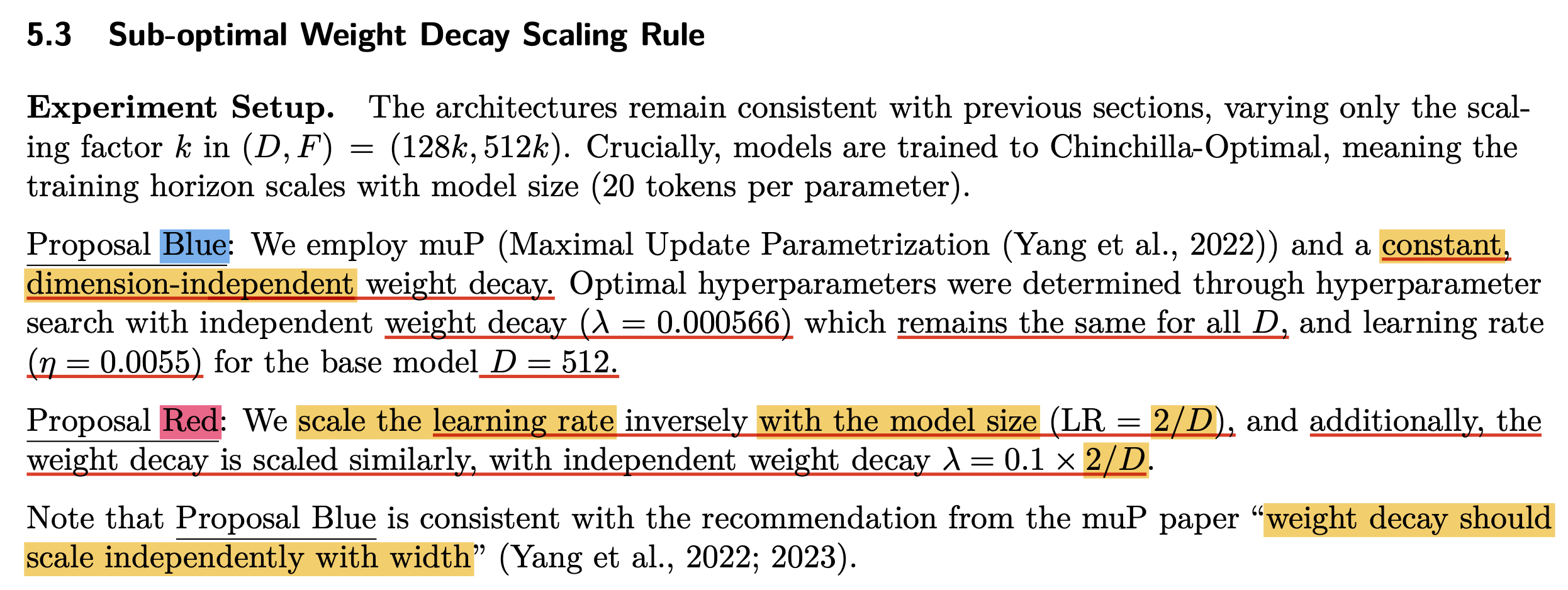

Weight Decay (and muP)

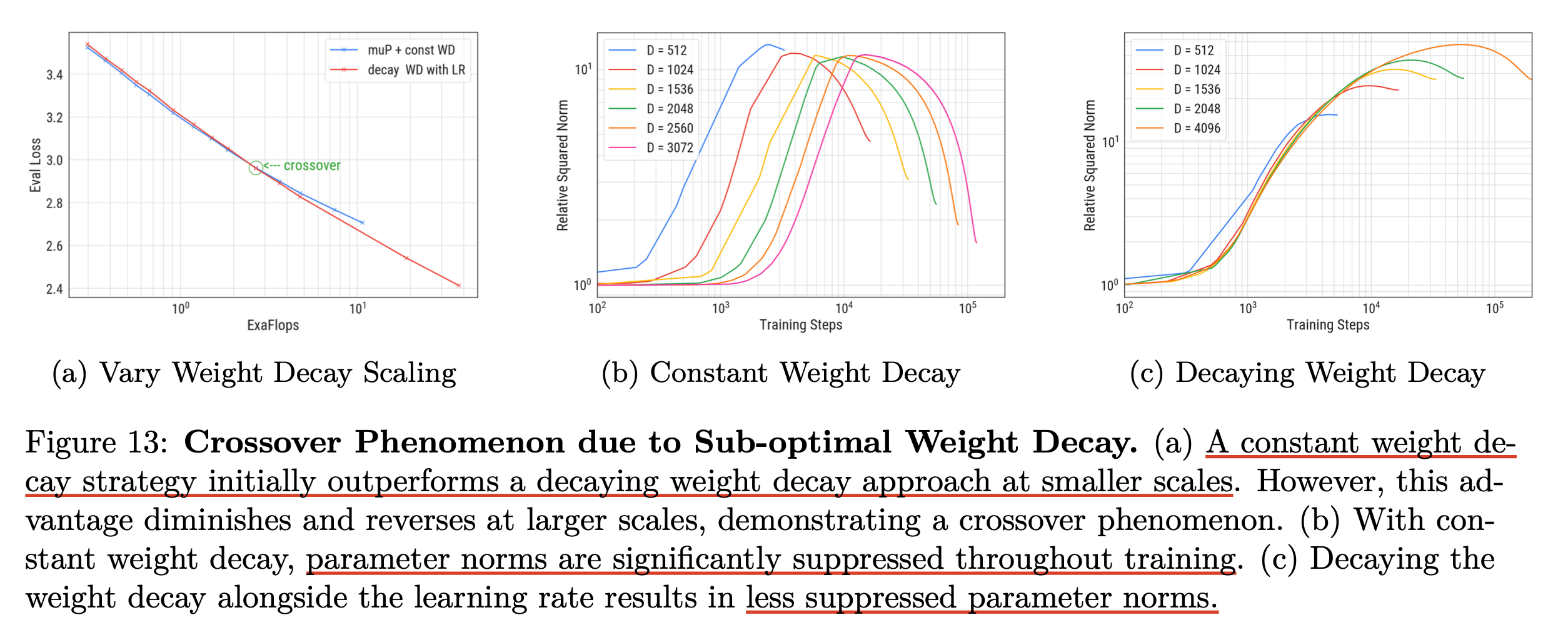

Fig.


References

- Papers

- tmp

- tmp 2

- Weight decay exclusions from minGPT issues

- Why not perform weight decay on layernorm/embedding?

- Exploring Weight Decay in Layer Normalization: Challenges and a Reparameterization Solution from Ohad Rubin

- When should you not use the bias in a layer?

- L2 Regularization versus Batch and Weight Normalization

- L2 Regularization and Batch Norm