Model Interpretability with Integrated Gradients

29 Mar 2023

Motivation

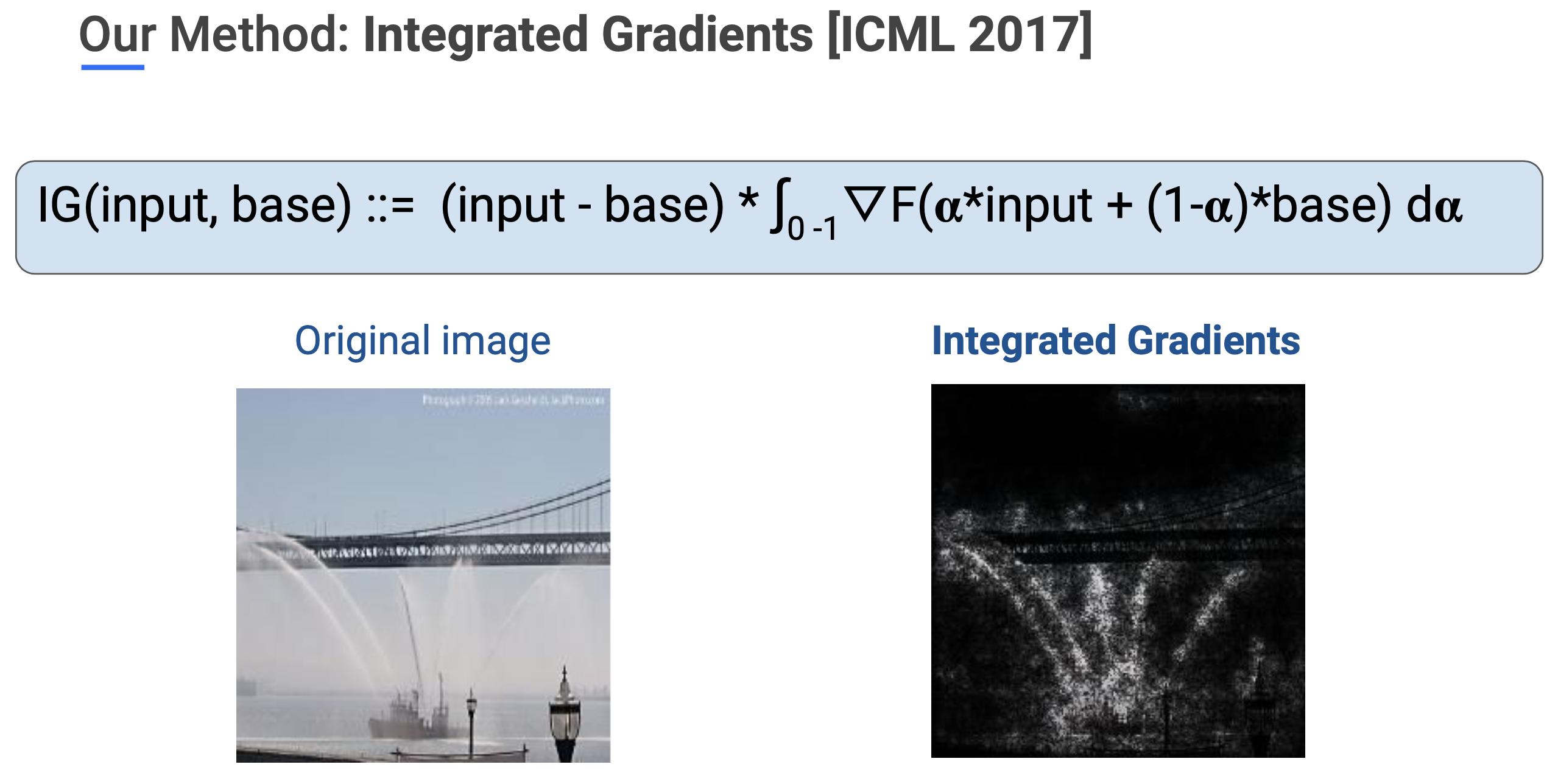

Integrated Gradients (IG)

What is Integrated Gradients (IG)?

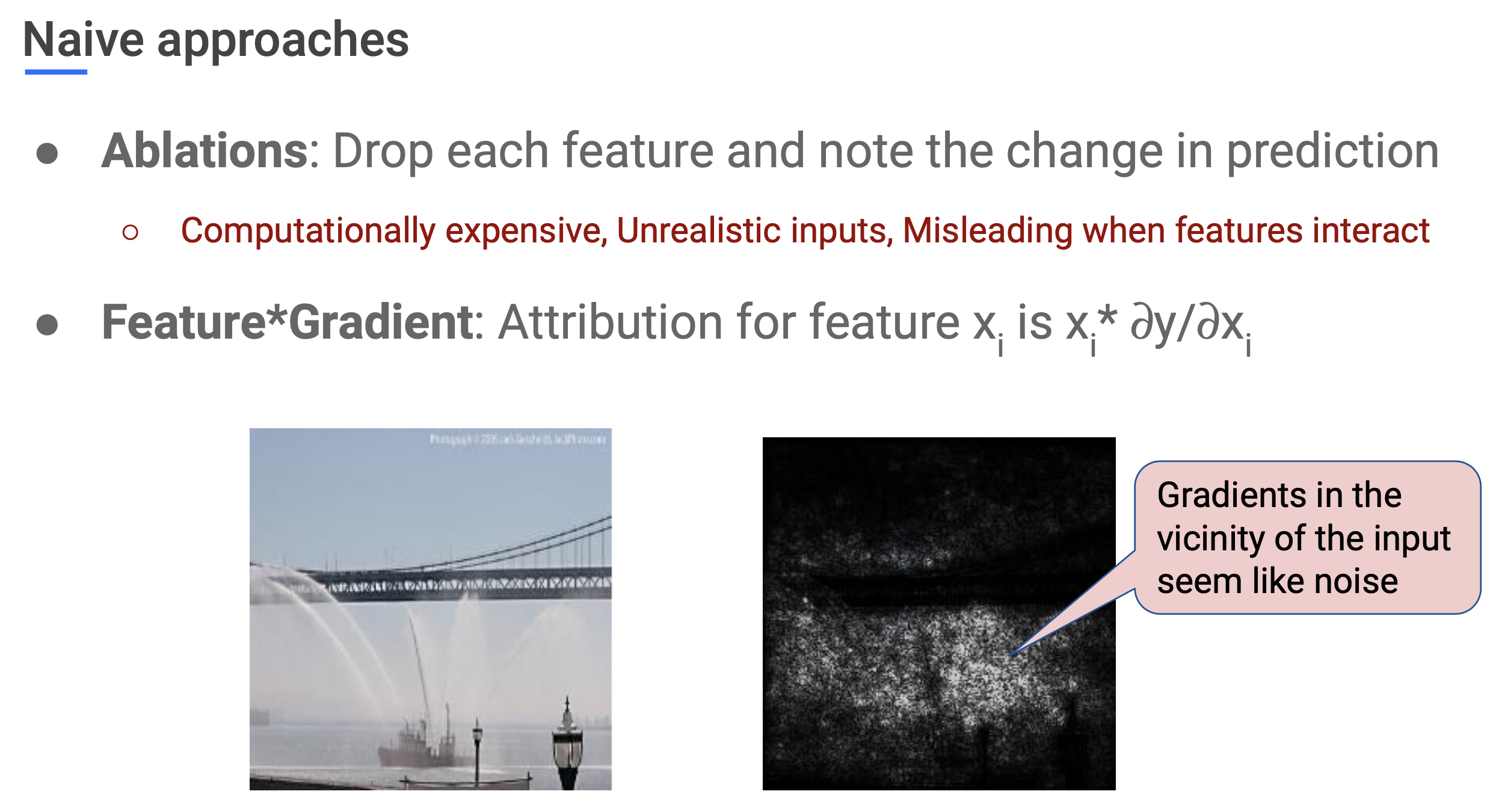

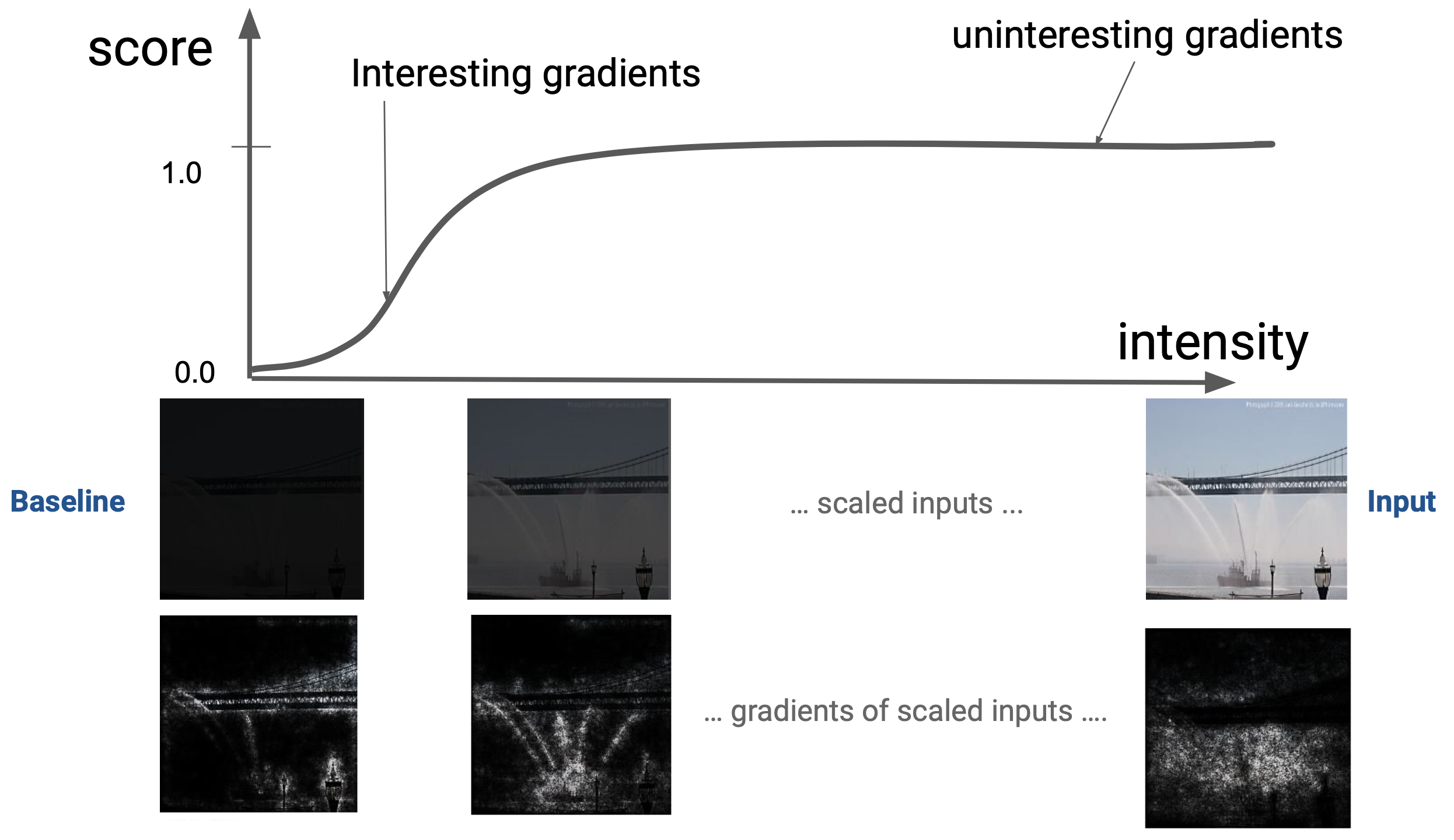

Various Explanation Methods

The paper Rethinking Attention-Model Explainability through Faithfulness Violation Test compares a range of existing explanation methods, including IG, for interpreting why a model produced a particular output.

Generic Attention-based Explanation Methods

- Inherent Attention Explanation (RawAtt)

- Attention Gradient (AttGrad)

- Attention InputNorm (AttIN)

Transformer-based Explanation Methods

- Partial Layer-wise Relevance Propagation (PLRP)

- Rollout

- Transformer Attention Attribution (TransAtt)

- Generic Attention Attribution (GenAtt)
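
As a rough illustration of one method in this group, the sketch below assumes that "Rollout" refers to attention rollout (Abnar and Zuidema, 2020): average the heads in each layer, mix the result with the identity matrix to account for residual connections, and multiply the layers together. The function names and the toy attention values are hypothetical and are not taken from the paper's code.

```python
def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def attention_rollout(layers):
    """layers: list of per-layer attention, each shaped [heads][tokens][tokens]."""
    n = len(layers[0][0])
    # Start from the identity: before any layer, each token attends only to itself.
    rollout = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for heads in layers:
        # Average the attention matrices over heads.
        avg = [[sum(h[i][j] for h in heads) / len(heads) for j in range(n)]
               for i in range(n)]
        # Mix with the identity to model the residual connection.
        mixed = [[0.5 * avg[i][j] + (0.5 if i == j else 0.0) for j in range(n)]
                 for i in range(n)]
        # Renormalize each row so it remains a distribution over tokens.
        mixed = [[v / sum(row) for v in row] for row in mixed]
        # Compose this layer with everything below it.
        rollout = matmul(mixed, rollout)
    return rollout


# Toy input: 2 layers, 2 heads, 3 tokens (illustrative values only).
layers = [
    [
        [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1], [0.3, 0.3, 0.4]],
        [[0.5, 0.5, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.8]],
    ],
    [
        [[0.6, 0.2, 0.2], [0.3, 0.4, 0.3], [0.1, 0.1, 0.8]],
        [[0.4, 0.4, 0.2], [0.2, 0.6, 0.2], [0.3, 0.3, 0.4]],
    ],
]
rollout = attention_rollout(layers)
```

Row `i` of `rollout` can be read as an estimate of how much each input token contributes to token `i` at the top layer.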

Gradient-based Attribution Method

- Input Gradient (InputGrad)

- Integrated Gradients (IG)
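
To make the difference between the two gradient-based methods concrete, here is a minimal sketch on a toy model. Input Gradient takes the gradient at the input itself, while IG averages gradients along the straight line from a baseline to the input and scales them by the difference between input and baseline. The toy model, its weights, the all-zeros baseline, and the step count are assumptions chosen for illustration. The integral is approximated with a midpoint Riemann sum.

```python
import math

WEIGHTS = [2.0, -1.0, 0.5]  # illustrative weights for the toy model


def model(x):
    """Toy differentiable model: sigmoid of a weighted sum."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def gradient(x):
    """Analytic gradient of the toy model with respect to its input."""
    y = model(x)
    return [y * (1.0 - y) * w for w in WEIGHTS]


def input_gradient(x):
    """Input Gradient: the gradient evaluated at the input."""
    return gradient(x)


def integrated_gradients(x, baseline, steps=200):
    """IG: (x - baseline) times the average gradient along the straight path."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        total = [t + g for t, g in zip(total, gradient(point))]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
ig = integrated_gradients(x, baseline)
grads = input_gradient(x)

# Completeness: IG attributions sum to model(x) - model(baseline).
gap = abs(sum(ig) - (model(x) - model(baseline)))
```

The final check reflects the completeness property of IG: up to numerical error, the attributions sum to the change in model output between the baseline and the input. Input Gradient has no such guarantee.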
